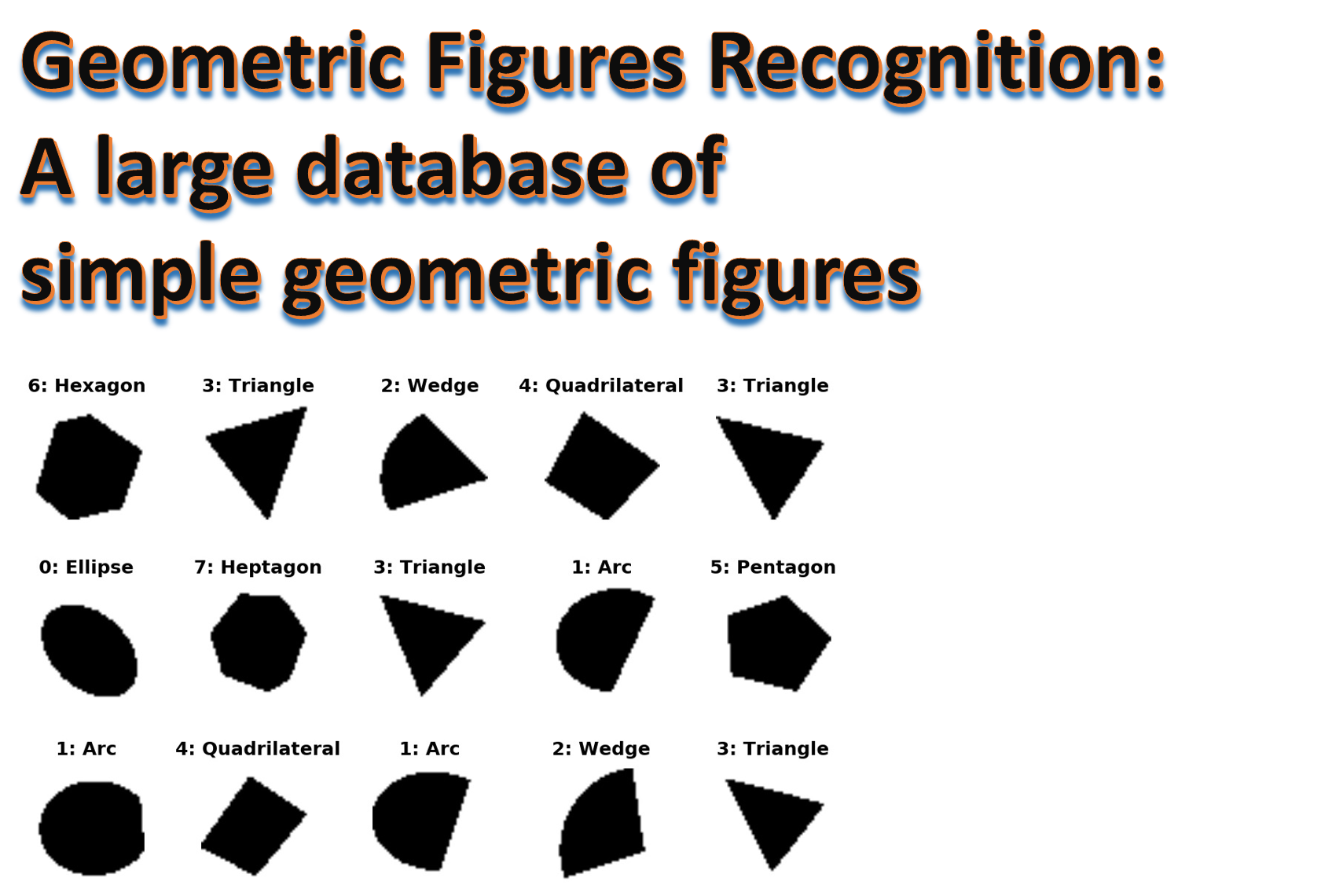

GeoFig is a large data set of simple 48x48-pixel black-and-white geometrical shapes. It consists of 8 shape types:

0: Ellipse

1: Arc

2: Wedge

3: Triangle

4: Quadrilateral

5: Pentagon

6: Hexagon

7: Heptagon

The images were generated by an automatic Python script that randomizes the shapes. The data set was designed to be as simple as possible, so that it can serve as a first clean example for deep learning courses, introductory tutorials, and first course projects. We used grayscale 48x48-pixel images so that the data can be processed on standard PCs with modest computing resources.

It is also intended to serve as a clear and simple data set for benchmarking deep learning libraries and deep learning hardware (like GPU systems). As far as we tried within the Keras library, achieving high prediction accuracy requires non-trivial effort and compute time, so it makes a good challenge for image recognition tutorials and course projects.

The GeoFig data set consists of 4 HDF5 files, each containing 80,000 48x48-pixel black-and-white images, for a total of 320,000 images. We believe that 20K images are enough for training, so you may need to download only the first file. If you need more images, you have 300K more to choose from. All the data sets are balanced, that is, they contain equal numbers of shapes from each class. There are also no duplicates: all 320K images are unique.

- http://www.samyzaf.com/ML/geofig/geofig1.h5.zip

- http://www.samyzaf.com/ML/geofig/geofig2.h5.zip

- http://www.samyzaf.com/ML/geofig/geofig3.h5.zip

- http://www.samyzaf.com/ML/geofig/geofig4.h5.zip

You will need to install the h5py Python module. Reading and writing HDF5 files can be easily learned from the following tutorial: https://www.getdatajoy.com/learn/Read_and_Write_HDF5_from_Python

Prerequisites

The code for this IPython notebook was tested on Windows 10, Python 2.7 with keras, numpy, matplotlib and jupyter. The deep learning hardware we used was an NVIDIA GPU (GeForce/GTX950) with cuDNN version 5103. Of course, it can also be run on a traditional CPU but it will be significantly slower (not recommended!).

To run the code in this notebook, you will need to download a few course libraries which we use in other examples of this course. They can be downloaded in one zip file from my GitHub repository:

https://github.com/samyzaf/kerutils

You can also view and download each file individually:

- http://www.samyzaf.com/cgi-bin/view_file.py?file=ML/lib/kerutils.py

- http://www.samyzaf.com/cgi-bin/view_file.py?file=ML/lib/dlutils.py

- http://www.samyzaf.com/cgi-bin/view_file.py?file=ML/lib/imgutils.py

- http://www.samyzaf.com/cgi-bin/view_file.py?file=ML/lib/progmeter.py

- http://www.samyzaf.com/cgi-bin/view_file.py?file=ML/style-notebook.css (notebook stylesheet)

Here are the Python modules and basic definitions we need for an example of how to use the GeoFig data set

from keras.datasets import cifar10

from keras.preprocessing.image import ImageDataGenerator

from keras.models import Sequential, load_model

from keras.layers import Dense, Dropout, Activation, Flatten

from keras.layers import Convolution2D, MaxPooling2D, AveragePooling2D, ZeroPadding2D

from keras.optimizers import SGD

from keras.constraints import maxnorm

from keras.utils import np_utils

from keras.layers.advanced_activations import SReLU, ELU, LeakyReLU

from keras.utils.visualize_util import plot

from keras.layers.noise import GaussianNoise

import matplotlib.pyplot as plt

import matplotlib.cm

from matplotlib import rcParams

from kerutils import *

from imgutils import *

%matplotlib inline

class_name = {

0: 'Ellipse',

1: 'Arc',

2: 'Wedge',

3: 'Triangle',

4: 'Quadrilateral',

5: 'Pentagon',

6: 'Hexagon',

7: 'Heptagon',

}

nb_classes = len(class_name) # Number of classes

classes = range(nb_classes) # List of class ids (as integers)

# These are css/html styles for good looking ipython notebooks

from IPython.core.display import HTML

css = open('style-notebook.css').read()

HTML('<style>{}</style>'.format(css));

Preparing training and validation data sets

The archived data sets above are too large for early experimentation, so we suggest that you start with smaller data sets and increase their size later if needed.

The imgutils module (see above) contains several utilities for manipulating HDF5 files. The function save_h5_from_file can be used to extract a subset of images from a larger HDF5 file into a new HDF5 file. It accepts three arguments:

- source HDF5 pool of images

- target HDF5 file (for saving the subset)

- class size: the number of images in each shape class. We have 8 shape classes, so the total number of images in the subset file is 8 times this number.

save_h5_from_file("geofig1.h5", "train.h5", 1000)

save_h5_from_file("geofig2.h5", "test.h5", 200)

Note that we made sure the training and validation data are disjoint (no common images) by extracting them from two different pools.
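To make the class-size argument concrete, here is a small arithmetic sketch. It does not call save_h5_from_file; the helper name subset_size is ours and is introduced only for illustration.

```python
NB_CLASSES = 8  # GeoFig has 8 shape classes


def subset_size(class_size, nb_classes=NB_CLASSES):
    """Total number of images in a balanced subset (hypothetical helper)."""
    return class_size * nb_classes


train_total = subset_size(1000)  # class size used for train.h5 above
test_total = subset_size(200)    # class size used for test.h5 above
print("training images:", train_total)
print("validation images:", test_total)
```

These totals are the sizes you should expect to see when the two files are loaded.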

Load training and test data

The imgutils module also contains a utility load_data for loading HDF5 files to memory (as Numpy arrays). This method accepts the names of your training and validation data set files, and it returns the following six Numpy arrays:

- X_train: an array of 8000 images whose shape is 8000x48x48.

- y_train: a one dimensional array of 8000 integers representing the class of each image in X_train.

- Y_train: an 8000x8 array of one-hot vectors, needed for the Keras model. For more details see: http://stackoverflow.com/questions/29831489/numpy-1-hot-array

- X_test: an array of 1600 validation images (1600x48x48)

- y_test: validation class array

- Y_test: one-hot vectors for the validation samples
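The relation between the integer class array and the one-hot array can be sketched with plain NumPy. This is only an illustration of the encoding, not the code used inside load_data, and the labels below are toy values rather than real GeoFig labels.

```python
import numpy as np

nb_classes = 8

# Toy class ids for illustration (not taken from the data set)
y = np.array([0, 3, 7, 3])

# Row i of the identity matrix is the one-hot vector of class i,
# so indexing it with y yields one one-hot row per sample.
Y = np.eye(nb_classes, dtype=np.float32)[y]
print(Y.shape)

# The integer classes can be recovered with argmax
y_back = Y.argmax(axis=1)
print(y_back)
```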

It should be noted that in addition to reading the images from the HDF5 file, the load_data method also performs some normalization of the image data, like scaling it to the unit interval and centering it around the mean value. You can control these actions through optional arguments of this function. Please look at the source code to learn more.
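As a rough idea of what such a normalization may look like, here is a minimal sketch. It assumes 8-bit pixel values (0 to 255) and a single global mean; the function name normalize and its arguments are ours, and the actual load_data implementation may differ.

```python
import numpy as np


def normalize(X, scale=255.0, center=True):
    """Scale pixels to [0, 1] and optionally subtract the global mean.

    Illustrative only: the 8-bit assumption and the argument names
    are not taken from imgutils.
    """
    X = X.astype(np.float32) / scale
    if center:
        X = X - X.mean()
    return X


# A tiny 2x2 "image" with one black and three white pixels
raw = np.array([[0, 255], [255, 255]], dtype=np.uint8)
scaled = normalize(raw, center=False)
centered = normalize(raw)
print(scaled)
print(centered)
```

After centering, pixel values are no longer pure 0 and 1, which is worth keeping in mind when drawing normalized images.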

X_train, y_train, Y_train, X_test, y_test, Y_test = load_data('train.h5', 'test.h5')

print('X_train shape:', X_train.shape)

print(X_train.shape[0], 'training samples')

print(X_test.shape[0], 'validation samples')
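Since the data sets are described as balanced, a quick sanity check on the loaded labels can be useful. The sketch below uses only the standard library and toy labels; the helper names class_counts and is_balanced are ours.

```python
from collections import Counter


def class_counts(labels):
    """Number of samples per class id."""
    return Counter(int(c) for c in labels)


def is_balanced(labels, nb_classes=8):
    """True if every class appears, and all classes appear equally often."""
    counts = class_counts(labels)
    return len(counts) == nb_classes and len(set(counts.values())) == 1


# Toy labels: 3 samples for each of the 8 classes
toy_labels = [c for c in range(8) for _ in range(3)]
print(class_counts(toy_labels))
print(is_balanced(toy_labels))
```

With the arrays loaded above, the same check would be is_balanced(y_train).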

Let's also write two small utilities for drawing samples of images, so we can inspect our results visually.

def draw_image(img, id):

    img = img.reshape(48,48)

    plt.imshow(img, cmap='gray', interpolation='none')

    plt.title("%d: %s" % (id, class_name[id]), fontsize=15, fontweight='bold', y=1.08)

    plt.axis('off')

    plt.show()

Let's draw image 18 in the X_train array as an example:

draw_image(X_train[18], y_train[18])

As we can see, the image is a bit blurry due to the normalization procedures that the load_data method has done to the original data. If you want to draw the raw data as it is in the HDF5 file, use the h5_get method to extract the raw image from the HDF5 file directly:

img = h5_get('train.h5', 'img_19') # images in h5 files are numbered from 1

id = y_train[18]

draw_image(img, id)

Sometimes we want to inspect a larger group of images in parallel, so we also provide a method for drawing a grid of consecutive images.

def draw_sample(X, y, n, rows=4, cols=4, imfile=None, fontsize=9):

    for i in range(0, rows*cols):

        plt.subplot(rows, cols, i+1)

        img = X[n+i].reshape(48,48)

        plt.imshow(img, cmap='gray', interpolation='none')

        id = y[n+i]

        plt.title("%d: %s" % (id, class_name[id]), fontsize=fontsize, y=1.08)

        plt.axis('off')

    plt.subplots_adjust(wspace=0.8, hspace=0.1)

draw_sample(X_train, y_train, 400, 3, 5)

Building A Neural Network for GeoFig

We will start with a simple Keras model which combines one Convolution2D layer with two Dense layers. Although simple in terms of code, it is expensive in terms of computation and hardware, as it contains 70 million parameters! This is far too many and should be avoided in general. However, we want to experiment with the common use of Dense layers and see why they are not well suited to image processing. In general, Dense layers should be avoided as much as possible when dealing with image data; the general practice is to use Convolution and Pooling layers. These two types of layers are explained in more detail in the following two articles, which we recommend reading before you approach the following code:

Let's Train Model 1

We now define our first model for recognizing GeoFig shapes. Note that, contrary to common practice, we decided to use the SReLU activation instead of the more popular relu activation. We ran several tests with relu, but SReLU seems to be more appropriate for GeoFig. One notable fact about SReLU is that it has trainable parameters, so it adapts itself during the learning process rather than being a fixed function like most other activations. You may read more about it in the following papers:

nb_epoch = 100

batch_size = 32

input_shape = X_train.shape[1:]

model = Sequential(name="model_1")

model.add(Convolution2D(64, 3, 3, input_shape=input_shape))

model.add(SReLU())

model.add(Flatten())

model.add(Dense(512))

model.add(SReLU())

model.add(Dropout(0.4))

model.add(Dense(256))

model.add(SReLU())

model.add(Dropout(0.4))

model.add(Dense(nb_classes))

model.add(Activation('softmax'))

print(model.summary())

save_model_summary(model, "model_1_summary.txt")

write_file("model_1.json", model.to_json())

fmon = FitMonitor(thresh=0.09, minacc=0.999, filename="model_1_autosave.h5")

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

hist = model.fit(

X_train,

Y_train,

batch_size=batch_size,

nb_epoch=nb_epoch,

shuffle=True,

validation_data=(X_test, Y_test),

verbose=0,

callbacks = [fmon]

)

model_file = "model_1.h5"

print("Saving model to:", model_file)

model.save(model_file)

plot(model, to_file="model_1_scheme.png", show_layer_names=False, show_shapes=True)

show_scores(model, hist, X_train, Y_train, X_test, Y_test)

loss, accuracy = model.evaluate(X_train, Y_train, verbose=0)

print("Training: accuracy = %f ; loss = %f" % (accuracy, loss))

loss, accuracy = model.evaluate(X_test, Y_test, verbose=0)

print("Validation: accuracy = %f ; loss = %f" % (accuracy, loss))

Although the training accuracy is quite high (99.82%!), the overall result is not good. The 10% gap between the training and validation accuracy is an indication of overfitting (which is also clearly noticeable in the accuracy and loss graphs above). Our model is successful on the training set only and is not as successful on any other data.

Inspecting the output

Before we search for a new model, let's take a quick look at some of the cases that our model missed. It may give us clues about the strengths and weaknesses of NN models, and what we can expect from these artificial models.

The predict_classes method is helpful for getting a vector (y_pred) of the classes predicted by model 1. We compare y_pred to the expected true classes y_test in order to get the false cases:

y_pred = model.predict_classes(X_test)

true_preds = [(x,y) for (x,y,p) in zip(X_test, y_test, y_pred) if y == p]

false_preds = [(x,y,p) for (x,y,p) in zip(X_test, y_test, y_pred) if y != p]

print("Number of valid predictions: ", len(true_preds))

print("Number of invalid predictions:", len(false_preds))

The list false_preds consists of all triples (x,y,p) where x is an image, y is its true class, and p is the (false) class predicted by the model.
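For a softmax output, choosing the predicted class amounts to taking the index of the largest probability in each row. The sketch below shows this idea on a hand-made probability array; the numbers are toy values, not output of our model, and the code illustrates the principle rather than the Keras implementation.

```python
import numpy as np

# Toy softmax outputs for 3 samples and 8 classes (each row sums to 1)
proba = np.array([
    [0.70, 0.10, 0.05, 0.05, 0.04, 0.03, 0.02, 0.01],
    [0.05, 0.15, 0.60, 0.05, 0.05, 0.04, 0.03, 0.03],
    [0.01, 0.02, 0.03, 0.04, 0.05, 0.05, 0.30, 0.50],
])
y_true = np.array([0, 1, 7])

# Predicted class = position of the largest probability in each row
y_hat = proba.argmax(axis=1)

# Indices of the samples where the prediction differs from the true class
wrong = np.flatnonzero(y_hat != y_true)
print(y_hat, wrong)
```

In Keras versions that do not provide predict_classes, the same argmax can be applied to the array returned by model.predict.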

Let's visualize a sample of 15 items:

for i,(x,y,p) in enumerate(false_preds[0:15]):

    plt.subplot(3, 5, i+1)

    img = x.reshape(48,48)

    plt.imshow(img, cmap='gray')

    plt.title("%d\ny: %s\np: %s" % (i, class_name[y], class_name[p]), fontsize=9, loc='left')

    plt.axis('off')

plt.subplots_adjust(wspace=0.8, hspace=0.6)

We see that our model sometimes confuses a Wedge with an Arc when the Wedge angle is close to $180^\circ$. We see this in examples 3, 8, and possibly 4 (although in example 4 the angle is not so close to $180^\circ$). Sometimes, when the Arc angle is very small, the model thinks it is an Ellipse (as in examples 0 and 13). The confusion between a Heptagon and a Hexagon can be understood similarly, but in all other cases there is no such explanation for the model's errors. Obviously, we need to work harder for a better model. It could be that our training set is too small (only 8000 samples), but most probably we need to use more convolution layers.
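Instead of eyeballing 15 images, one can also tally which (true class, predicted class) pairs occur most often among the failures. The sketch below uses only the standard library; the function name confusion_pairs is ours, and the labels are toy values for illustration, not results from the model above.

```python
from collections import Counter

class_name = {0: 'Ellipse', 1: 'Arc', 2: 'Wedge', 3: 'Triangle',
              4: 'Quadrilateral', 5: 'Pentagon', 6: 'Hexagon', 7: 'Heptagon'}


def confusion_pairs(y_true, y_pred):
    """Count (true class, predicted class) pairs over misclassified samples."""
    return Counter((t, p) for t, p in zip(y_true, y_pred) if t != p)


# Toy labels for illustration only
y_true = [2, 2, 1, 1, 7, 3]
y_pred = [1, 1, 0, 1, 6, 3]

pairs = confusion_pairs(y_true, y_pred)
for (t, p), n in pairs.most_common():
    print("%s -> %s: %d" % (class_name[t], class_name[p], n))
```

With the notebook variables, the call would be confusion_pairs(y_test, y_pred).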

Second Keras Model for GeoFig database

Let's try adding an additional Convolution2D layer and reducing the width of the Dense layers. The number of parameters is still too high (32 million), but much lower than in model 1.
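Most of these parameters sit in the first Dense layer that follows Flatten. The arithmetic sketch below takes the layer sizes from the two model definitions in this notebook and assumes single-channel 48x48 inputs and unpadded ("valid") convolutions with stride 1; the helper names are ours. It counts only the first Dense layer, so the totals printed by model.summary() are somewhat higher (the convolutions, the other Dense layers, and the SReLU activations also carry trainable parameters).

```python
def conv_out(size, kernel):
    """Side length after a 'valid' convolution with stride 1."""
    return size - kernel + 1


def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


filters = 64

# Model 1: one 3x3 convolution, then Flatten -> Dense(512)
side1 = conv_out(48, 3)
flat1 = side1 * side1 * filters
model1_dense = dense_params(flat1, 512)

# Model 2: two 3x3 convolutions, then Flatten -> Dense(256)
side2 = conv_out(conv_out(48, 3), 3)
flat2 = side2 * side2 * filters
model2_dense = dense_params(flat2, 256)

print("model 1 first Dense layer:", model1_dense)
print("model 2 first Dense layer:", model2_dense)
```

The flattened feature map is almost the same size in both models, so the saving comes mainly from halving the width of the first Dense layer.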

nb_epoch = 100

batch_size = 32

input_shape = X_train.shape[1:]

model = Sequential(name="model_2")

model.add(Convolution2D(64, 3, 3, input_shape=input_shape))

model.add(SReLU())

model.add(Convolution2D(64, 3, 3))

model.add(SReLU())

model.add(Flatten())

model.add(Dense(256))

model.add(SReLU())

model.add(Dropout(0.4))

model.add(Dense(64))

model.add(SReLU())

model.add(Dropout(0.4))

model.add(Dense(nb_classes))

model.add(Activation('softmax'))

print(model.summary())

save_model_summary(model, "model_2_summary.txt")

write_file("model_2.json", model.to_json())

fmon = FitMonitor(thresh=0.09, minacc=0.999, filename="model_2_autosave.h5")

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

hist = model.fit(

X_train,

Y_train,

batch_size=batch_size,

nb_epoch=nb_epoch,

shuffle=True,

validation_data=(X_test, Y_test),

verbose=0,

callbacks = [fmon]

)

model_file = "model_2.h5"

print("Saving model to:", model_file)

model.save(model_file)

plot(model, to_file="model_2_scheme.png", show_layer_names=False, show_shapes=True)

show_scores(model, hist, X_train, Y_train, X_test, Y_test)

It seems that the second Convolution layer we added has reduced overfitting by almost 4%, but this is not good enough yet. The clear gap between the training and validation loss graphs indicates that there is more room for improvement.

Validation credibility

Before proceeding to our third model, let's take a moment to discuss one more issue. From the two models above, we learn that training accuracy can be quite high (99.82% in model 1, and 99.95% in model 2), but we should not be impressed, as we fall short on our validation set. In some cases, however, we might be satisfied with what we got but would like to carry out further tests to make sure that the validation accuracy we have is not volatile. After all, our validation set ("test.h5") has only 1600 samples, which might not be enough to trust in general.

Our imgutils contains a special method check_data_set for testing our model on as many samples as we wish from our large repository of samples (320K samples!). This method accepts three arguments:

- Keras model object

- HDF5 file of GeoFig images

- Number of images to sample

You may want to sample a few thousand images from each repository in order to gain confidence in your model. Here are two examples of using this method, which show that the validation accuracy we got is trustworthy:

check_data_set(model, "geofig4.h5", sample=5000)

check_data_set(model, "geofig3.h5", sample=10000)
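To get a feeling for how much the sample size matters, one can look at the binomial standard error of an accuracy estimate. This is a rough statistical sketch that assumes independent samples; the accuracy value used below is hypothetical and chosen only for illustration, and the function name is ours.

```python
import math


def accuracy_std_error(acc, n):
    """Binomial standard error of an accuracy estimated from n independent samples."""
    return math.sqrt(acc * (1.0 - acc) / n)


acc = 0.90  # hypothetical accuracy, for illustration only
for n in (1600, 5000, 10000):
    se = accuracy_std_error(acc, n)
    # 1.96 standard errors give an approximate 95% interval
    print("n=%5d  std. error=%.4f  approx. 95%% interval=+/-%.4f" % (n, se, 1.96 * se))
```

The error shrinks with the square root of the sample size, which is why testing on several thousand extra images gives a noticeably steadier estimate than 1600 samples.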

Model 3

We will add a third Convolution layer, and increase the filter size to 5x5 in the first two layers. In addition, we add three new MaxPooling2D layers (one after each Convolution2D). The immediate effect of these layers is a drastic reduction in the model's number of parameters, from the 70 million of model 1 to 915K, roughly 1% of the size of model 1. Even if we get results similar to model 1, it would be considered a success and evidence for why Convolution and Pooling layers are the right kind of layers to use for image data.
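The reduction can be traced by following the feature-map size through the layers. The sketch below assumes 48x48 inputs, unpadded ("valid") convolutions with stride 1, and 2x2 pooling that rounds down; the helper names are ours. It only tracks sizes and the first Dense layer, not the full parameter count.

```python
def conv_out(size, kernel):
    """Side length after a 'valid' convolution with stride 1."""
    return size - kernel + 1


def pool_out(size, pool=2):
    """Side length after non-overlapping pooling (rounded down)."""
    return size // pool


side = 48
trace = [side]
for kernel in (5, 5, 3):  # kernel sizes of the three convolutions in model 3
    side = conv_out(side, kernel)
    trace.append(side)
    side = pool_out(side)
    trace.append(side)

flat = side * side * 64             # size of the Flatten output (64 filters)
first_dense = flat * 256 + 256      # weights + biases of Dense(256)

print("feature map sides:", trace)
print("flattened size:", flat)
print("first Dense layer parameters:", first_dense)
```

Each pooling step halves the side of the feature map, so the vector that reaches the first Dense layer is a few hundred values long instead of more than a hundred thousand.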

nb_epoch = 100

batch_size = 32

input_shape = X_train.shape[1:]

model = Sequential(name="model_3")

model.add(Convolution2D(64, 5, 5, input_shape=input_shape))

model.add(SReLU())

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Convolution2D(64, 5, 5))

model.add(SReLU())

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Convolution2D(64, 3, 3))

model.add(SReLU())

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Flatten())

model.add(Dense(256))

model.add(SReLU())

model.add(Dropout(0.5))

model.add(Dense(128))

model.add(SReLU())

model.add(Dropout(0.5))

model.add(Dense(nb_classes))

model.add(Activation('softmax'))

print(model.summary())

save_model_summary(model, "model_3_summary.txt")

write_file("model_3.json", model.to_json())

fmon = FitMonitor(thresh=0.09, minacc=0.999, filename="model_3_autosave.h5")

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

hist = model.fit(

X_train,

Y_train,

batch_size=batch_size,

nb_epoch=nb_epoch,

shuffle=True,

validation_data=(X_test, Y_test),

verbose=0,

callbacks = [fmon]

)

model_file = "model_3.h5"

print("Saving model to:", model_file)

model.save(model_file)

plot(model, to_file="model_3_scheme.png", show_layer_names=False, show_shapes=True)

show_scores(model, hist, X_train, Y_train, X_test, Y_test)

loss, accuracy = model.evaluate(X_train, Y_train, verbose=0)

print("Training: accuracy = %f ; loss = %f" % (accuracy, loss))

loss, accuracy = model.evaluate(X_test, Y_test, verbose=0)

print("Validation: accuracy = %f ; loss = %f" % (accuracy, loss))

This is getting better. Using convolution and pooling layers has enabled better validation accuracy (the gap has dropped to less than 3.5%). And let us mention again that the number of model parameters has dropped from 70M to about 900K! So by all means this looks like a giant step forward. We will stop our experiments here and let you try to do better (good luck ;-). Is it possible to achieve 100% accuracy? And if so, at what cost? We don't want too many parameters (not a fair game!), and we don't want too many layers and neurons. After all, we are dealing with a rather simple image database (the simplest geometrical figures), and we want to replace old school programmers with neural networks ... :-)

You may enlarge your training and validation sets. We used only 8000 training samples. How about using 32000 training samples? You may also experiment with other activation functions and optimizers (there are plenty of them in Keras). You can also work directly in Theano or TensorFlow.

Before you proceed, let's take a look at some examples in which model 3 fails:

y_pred = model.predict_classes(X_test)

true_preds = [(x,y) for (x,y,p) in zip(X_test, y_test, y_pred) if y == p]

false_preds = [(x,y,p) for (x,y,p) in zip(X_test, y_test, y_pred) if y != p]

print("Number of valid predictions: ", len(true_preds))

print("Number of invalid predictions:", len(false_preds))

Let's draw the first 15 failures

for i,(x,y,p) in enumerate(false_preds[0:15]):

    plt.subplot(3, 5, i+1)

    img = x.reshape(48,48)

    plt.imshow(img, cmap='gray')

    plt.title("%d\ny: %s\np: %s" % (i, class_name[y], class_name[p]), fontsize=9, loc='left')

    plt.axis('off')

plt.subplots_adjust(wspace=0.8, hspace=0.6)

We now see more of the Arc/Wedge and Arc/Ellipse failures and fewer of the Hexagon/Heptagon failures. Due to the low 48x48-pixel resolution, we may not be able to achieve 100% recognition accuracy. In example 12 above, even a trained human eye can hardly tell whether the figure is an Arc or a Wedge. So should this figure be labeled as both an Arc and a Wedge? Or should we eliminate such cases from the GeoFig database?

from nbstyle import *

HTML('<style>%s</style>' % (fancy(),))