Object Recognition in CIFAR-10 Image Database¶

CIFAR-10 is by now a classic computer-vision dataset for object recognition case studies. It is a labeled subset of the 80 million tiny images dataset, and it is named after the Canadian Institute for Advanced Research (CIFAR, pronounced "see far").

The CIFAR-10 dataset consists of 60000 32x32x3 color images in 10 classes of equal size (6000 images per class). Each class of images corresponds to a kind of physical object (automobile, cat, dog, airplane, etc.). The dataset was collected by Alex Krizhevsky, Vinod Nair, and Geoffrey Hinton. We strongly recommend looking at the following two sources before starting work on this notebook:

Prerequisites¶

The code in this IPython notebook was run on Windows 10 with Python 2.7, keras, numpy, matplotlib and jupyter. We also used an NVIDIA GPU (GeForce GTX950) with cuDNN 5103. Of course, it can also be run on a CPU, but it will be significantly slower (not recommended!).

To run the code in this notebook, you'll also need to download the following course libraries which we use in several examples of this course:

- http://www.samyzaf.com/cgi-bin/view_file.py?file=ML/lib/kerutils.py

- http://www.samyzaf.com/cgi-bin/view_file.py?file=ML/lib/dlutils.py

- http://www.samyzaf.com/ML/style-notebook.css (notebook stylesheet)

You can actually download all the course modules from Github:

https://github.com/samyzaf/kerutils

from keras.datasets import cifar10

from keras.preprocessing.image import ImageDataGenerator

from keras.models import Sequential, load_model

from keras.layers import Dense, Dropout, Activation, Flatten

from keras.layers import Convolution2D, MaxPooling2D

from keras.optimizers import SGD

from keras.constraints import maxnorm

from keras.utils import np_utils

from keras.layers.noise import GaussianNoise

from keras.layers.advanced_activations import SReLU

from keras.utils.visualize_util import plot

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import time, pickle

from kerutils import *

%matplotlib inline

# These are css/html styles for good looking ipython notebooks

from IPython.core.display import HTML

css = open('style-notebook.css').read()

HTML('<style>{}</style>'.format(css))

The CIFAR-10 image classes are encoded as integers 0-9 by the following Python dictionary:

nb_classes = 10

class_name = {

0: 'airplane',

1: 'automobile',

2: 'bird',

3: 'cat',

4: 'deer',

5: 'dog',

6: 'frog',

7: 'horse',

8: 'ship',

9: 'truck',

}

Load training and test data¶

(X_train, y_train), (X_test, y_test) = cifar10.load_data()

y_train = y_train.reshape(y_train.shape[0]) # somehow y_train comes as a 2D nx1 matrix

y_test = y_test.reshape(y_test.shape[0])

print('X_train shape:', X_train.shape)

print(X_train.shape[0], 'training samples')

print(X_test.shape[0], 'validation samples')

The original data of each image is a 32x32x3 array of integers from 0 to 255. We need to scale it down to floats in the unit interval:

X_train = X_train.astype('float32')

X_test = X_test.astype('float32')

X_train /= 255

X_test /= 255

As usual, we must convert the y_train and y_test vectors to one-hot format:

0 → [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]

1 → [0, 1, 0, 0, 0, 0, 0, 0, 0, 0]

2 → [0, 0, 1, 0, 0, 0, 0, 0, 0, 0]

3 → [0, 0, 0, 1, 0, 0, 0, 0, 0, 0]

etc...

Y_train = np_utils.to_categorical(y_train, nb_classes)

Y_test = np_utils.to_categorical(y_test, nb_classes)
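As a rough illustration of what the conversion above does, here is a minimal NumPy sketch of one-hot encoding and its inverse. The helper names `one_hot` and `from_one_hot` are ours and not part of Keras; the sketch is only assumed to mirror what `np_utils.to_categorical` does for integer labels.

```python
import numpy as np

def one_hot(labels, nb_classes):
    """Map integer labels of shape (n,) to a one-hot matrix of shape (n, nb_classes)."""
    labels = np.asarray(labels, dtype=int)
    encoded = np.zeros((labels.shape[0], nb_classes), dtype='float32')
    encoded[np.arange(labels.shape[0]), labels] = 1.0   # one 1 per row
    return encoded

def from_one_hot(encoded):
    """Recover the integer labels: the position of the largest entry in each row."""
    return np.argmax(encoded, axis=1)

sample_labels = [0, 3, 9]                 # toy labels, not taken from the dataset
sample_encoded = one_hot(sample_labels, 10)
print(sample_encoded[1])                  # the row for class 3
print(from_one_hot(sample_encoded))
```

The inverse direction is handy whenever a one-hot row has to be turned back into an index into class_name.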

Let's also write two small utilities for drawing samples of images, so we can inspect our results visually.

def draw_img(i):
    im = X_train[i]
    c = y_train[i]
    plt.imshow(im)
    plt.title("Class %d (%s)" % (c, class_name[c]))
    plt.axis('on')

def draw_sample(X, y, n, rows=4, cols=4, imfile=None, fontsize=12):
    for i in range(0, rows*cols):
        plt.subplot(rows, cols, i+1)
        im = X[n+i].reshape(32,32,3)
        plt.imshow(im, cmap='gnuplot2')
        plt.title("{}".format(class_name[y[n+i]]), fontsize=fontsize)
        plt.axis('off')
    plt.subplots_adjust(wspace=0.6, hspace=0.01)
    #plt.subplots_adjust(hspace=0.45, wspace=0.45)
    #plt.tight_layout(pad=0.4, w_pad=0.5, h_pad=1.0)
    if imfile:
        plt.savefig(imfile)

Let's draw image 7 in X_train, for example:

draw_img(7)

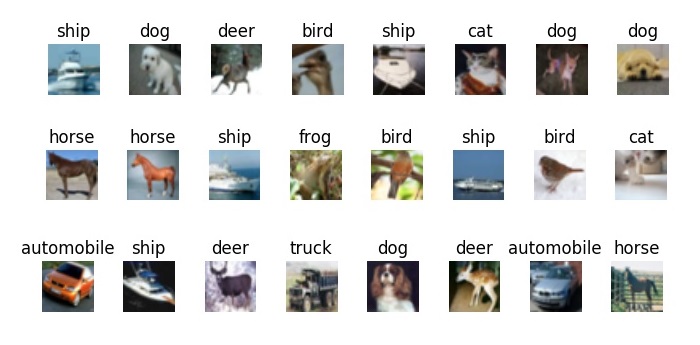

To test the second utility, let's draw the first 15 images in a 3x5 grid:

draw_sample(X_train, y_train, 0, 3, 5)


Building Neural Networks for CIFAR-10¶

In contrast to previous case studies, a fully connected neural network would be prohibitively expensive here, unless we have good reasons to believe that we can make good progress with a small number of neurons on the layers beyond the input layer. The input layer would have to be of size 3072 (as every image is a 32x32x3 array). If we add a hidden layer of the same size, we end up with more than 9 million synapses (3072 x 3072) between the first two layers alone. Every additional layer of that size adds over 9 million more, which quickly becomes impractical.

Deep learning frameworks provide special layer types, convolution and pooling layers, which process images with far fewer synapses than a Dense layer. In a convolution layer, each unit is connected only to a very small neighborhood of pixels, of size 3x3 or 5x5. Intuitively, an image pixel is related mostly to the pixels around it rather than to pixels in a faraway region of the image.
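To make the comparison concrete, the following back-of-the-envelope sketch counts the weights of a fully connected layer and of a convolution layer. The helper names are ours, and the 32 filters of size 3x3 are an assumed example configuration.

```python
def dense_params(n_inputs, n_units):
    """Weights plus one bias per unit in a fully connected layer."""
    return n_inputs * n_units + n_units

def conv2d_params(in_channels, n_filters, kernel_h, kernel_w):
    """Weights plus one bias per filter; the count does not depend on image size."""
    return in_channels * kernel_h * kernel_w * n_filters + n_filters

n_pixels = 32 * 32 * 3                            # 3072 input values per image
dense_count = dense_params(n_pixels, n_pixels)    # hidden layer as large as the input
conv_count = conv2d_params(3, 32, 3, 3)           # 32 filters of size 3x3 over RGB

print("Dense 3072 -> 3072:", dense_count)
print("Conv 3x3, 32 filters:", conv_count)
```

The convolution layer is so much smaller because the same small filter is reused at every image position.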

These two types of layers (convolution and pooling) are explained in more detail in the following two articles, which we recommend reading before you approach the code below:

We will start with a small Keras model which combines a well-thought-out mix of Convolution2D, MaxPooling2D and Dense layers. It is mostly based on open source code examples by François Chollet (the author of Keras, from Google) and other similar sources:

Two Types of Training¶

We will use two types of training:

- Standard training: the usual Keras fit method

- Training with augmented data: in this mode, our training data passes through a special Keras generator which applies certain image operations to each data item and generates new items for training. This way we can multiply our training data as much as we wish, and thus provide our model with as much training as we wish (but of course we should still watch out for overfitting).

The Keras generator for the second training mode is called ImageDataGenerator and is described in the Keras manual page:

https://keras.io/preprocessing/image/#imagedatagenerator
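The idea behind the second mode can be sketched with a plain Python generator. This only illustrates the mechanism and is not the Keras implementation: `augmenting_batches`, `transform` and `jitter` are hypothetical names, and the toy numbers stand in for images.

```python
import random

def augmenting_batches(samples, labels, batch_size, transform, seed=0):
    """Yield (batch_x, batch_y) forever; every sample is transformed on the fly."""
    rng = random.Random(seed)
    order = list(range(len(samples)))
    while True:                      # each pass of the for loop below is one epoch
        rng.shuffle(order)
        for start in range(0, len(order), batch_size):
            idx = order[start:start + batch_size]
            batch_x = [transform(samples[i], rng) for i in idx]
            batch_y = [labels[i] for i in idx]
            yield batch_x, batch_y

def jitter(value, rng):
    """Toy 'augmentation': add a small random offset to a number."""
    return value + rng.uniform(-0.1, 0.1)

toy_x = [float(i) for i in range(10)]       # stand-ins for images
toy_y = [i % 2 for i in range(10)]          # stand-ins for class labels
gen = augmenting_batches(toy_x, toy_y, batch_size=4, transform=jitter)
first_x, first_y = next(gen)
print(len(first_x), first_y)
```

Only one batch exists at any time, and the same original sample yields a slightly different variant in every epoch.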

Let's Train Model 1 (standard training)¶

nb_epoch = 50

batch_size = 32

model1 = Sequential()

model1.add(Convolution2D(32, 3, 3, input_shape=(32, 32, 3), border_mode='same', activation='relu', W_constraint=maxnorm(3)))

model1.add(Dropout(0.2))

model1.add(Convolution2D(32, 3, 3, activation='relu', border_mode='same', W_constraint=maxnorm(3)))

model1.add(MaxPooling2D(pool_size=(2, 2)))

model1.add(Flatten())

model1.add(Dense(512, activation='relu', W_constraint=maxnorm(3)))

model1.add(Dropout(0.5))

model1.add(Dense(nb_classes, activation='softmax'))

# Compile model

lrate = 0.01

decay = lrate/nb_epoch

sgd = SGD(lr=lrate, momentum=0.9, decay=decay, nesterov=False)

model1.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

print(model1.summary())

print('Standard Training.')

h = model1.fit(

X_train,

Y_train,

batch_size=batch_size,

nb_epoch=nb_epoch,

validation_data=(X_test, Y_test),

shuffle=True

)

show_scores(model1, h, X_train, Y_train, X_test, Y_test)

print('Saving model1 to the file "model1.h5"')

model1.save("model1.h5")

loss, accuracy = model1.evaluate(X_train, Y_train, verbose=0)

print("Training: accuracy = %f ; loss = %f" % (accuracy, loss))

loss, accuracy = model1.evaluate(X_test, Y_test, verbose=0)

print("Validation: accuracy = %f ; loss = %f" % (accuracy, loss))

What we see in the last two graphs is a classic example of overfitting. While the training accuracy has skyrocketed to 99.96% (wow!!), our validation data comes to the rescue and cools down our enthusiasm: only 70.14%. The gap of almost 30% between training and validation accuracy is a clear indication of overfitting, and a good reason to abandon model1 and look for a better one. We should also notice the big gap between the training loss and the validation loss. This is another clear mark of overfitting that should raise a warning sign.

Inspecting the output¶

Nevertheless, before we search for a new model, let's take a quick look at some of the cases that model1 missed. It may give us hints about the strengths and weaknesses of NN models, and about what we can expect from these artificial models.

The predict_classes method is helpful for getting a vector (y_pred) of the predicted classes of model1. We should compare y_pred to the expected true classes y_test in order to get the false cases:

y_pred = model1.predict_classes(X_test)

true_preds = [(x,y) for (x,y,p) in zip(X_test, y_test, y_pred) if y == p]

false_preds = [(x,y,p) for (x,y,p) in zip(X_test, y_test, y_pred) if y != p]

print("Number of true predictions: ", len(true_preds))

print("Number of false predictions:", len(false_preds))

The array false_preds consists of all triples (x,y,p) where x is an image, y is its true class, and p is the false predicted value of model1.
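Beyond listing the false cases, it can be informative to count which pairs of classes get confused most often. The sketch below does this with `collections.Counter` on made-up label vectors: `toy_true` and `toy_pred` are illustrative stand-ins for y_test and y_pred, and `most_common_confusions` is a name we introduce here.

```python
from collections import Counter

def most_common_confusions(y_true, y_pred, top=3):
    """Count (true, predicted) pairs over the misclassified samples only."""
    pairs = Counter((int(t), int(p)) for t, p in zip(y_true, y_pred) if t != p)
    return pairs.most_common(top)

# Made-up labels for illustration (0 = airplane, 3 = cat, 5 = dog, 8 = ship)
toy_true = [3, 3, 5, 0, 8, 3, 5]
toy_pred = [5, 5, 3, 8, 8, 3, 5]

for (t, p), count in most_common_confusions(toy_true, toy_pred):
    print("true=%d predicted=%d: %d times" % (t, p, count))
```

With the real vectors one would call most_common_confusions(y_test, y_pred) and look the indices up in class_name.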

Let's visualize a sample of 15 items:

for i,(x,y,p) in enumerate(false_preds[0:15]):
    plt.subplot(3, 5, i+1)
    plt.imshow(x, cmap='gnuplot2')
    plt.title("y: %s\np: %s" % (class_name[y], class_name[p]), fontsize=9, loc='left')
    plt.axis('off')
plt.subplots_adjust(wspace=0.6, hspace=0.2)

Well, we see that model1 confuses airplanes with ships, dogs with cats, etc. But we should not underestimate the fact that it is still correct in 70% of the cases, which is far from trivial! (Suppose that as a programmer you were assigned to write a traditional computer program that guesses the class correctly in 70% of the cases; think how hard it would be...)

Second Keras Model for the CIFAR-10 dataset¶

Let's try our small model with the aid of augmented data. The Keras ImageDataGenerator is a great tool for generating more training data from old data, so that we have enough training data and can reduce overfitting.

The ImageDataGenerator takes quite a few graphic parameters which we cannot explain in this tutorial. We recommend reading the Keras documentation page and a short tutorial:

- https://keras.io/preprocessing/image/#imagedatagenerator

- http://machinelearningmastery.com/image-augmentation-deep-learning-keras/

Let's first take a look at a few samples of images that are generated by ImageDataGenerator:

imdgen = ImageDataGenerator(

featurewise_center = False, # set input mean to 0 over the dataset

samplewise_center = False, # set each sample mean to 0

featurewise_std_normalization = False, # divide inputs by std of the dataset

samplewise_std_normalization = False, # divide each input by its std

zca_whitening = False, # apply ZCA whitening

rotation_range = 0, # randomly rotate images in the range (degrees, 0 to 180)

width_shift_range = 0.1, # randomly shift images horizontally (fraction of total width)

height_shift_range = 0.1, # randomly shift images vertically (fraction of total height)

horizontal_flip = True, # randomly flip images

vertical_flip = False, # randomly flip images

)

imdgen.fit(X_train)

it = imdgen.flow(X_train, Y_train, batch_size=15) # This is a Python iterator

images, categories = next(it) # works in both Python 2 and Python 3

print("Number of images returned by iterator:", len(images))

for i in range(15):
    plt.subplot(3, 5, i+1)
    im = images[i]
    c = np.where(categories[i] == 1)[0][0] # convert one-hot to regular index
    plt.imshow(im, cmap='gnuplot2')
    plt.title(class_name[c], fontsize=9)
    plt.axis('off')
plt.subplots_adjust(wspace=0.6, hspace=0.2)

The images you see are not taken directly from the CIFAR-10 collection. They were generated by the Keras ImageDataGenerator from images in the CIFAR-10 database by applying various image operators to them. This way we can increase the number of training samples almost indefinitely (in every training epoch we get a new set of randomly transformed samples!).
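The kind of operators involved can be illustrated with a few lines of NumPy. This is a simplified sketch and not the code Keras runs: `random_flip_and_shift` is a hypothetical helper, and it wraps shifted pixels around the border with `np.roll`, whereas ImageDataGenerator fills the uncovered area according to its fill_mode setting.

```python
import numpy as np

def random_flip_and_shift(img, rng, max_shift=0.1):
    """Return a randomly mirrored and shifted copy of an (H, W, C) image array."""
    h, w = img.shape[:2]
    out = img[:, ::-1, :] if rng.rand() < 0.5 else img   # horizontal flip, 50% chance
    max_dy, max_dx = int(h * max_shift), int(w * max_shift)
    dy = rng.randint(-max_dy, max_dy + 1)                # vertical shift in pixels
    dx = rng.randint(-max_dx, max_dx + 1)                # horizontal shift in pixels
    return np.roll(np.roll(out, dy, axis=0), dx, axis=1)

rng = np.random.RandomState(0)
toy_img = rng.rand(32, 32, 3).astype('float32')          # random stand-in for an image
variants = [random_flip_and_shift(toy_img, rng) for _ in range(5)]
print([v.shape for v in variants])
```

Every variant keeps the 32x32x3 shape, so it can be fed to the same model as the original image.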

The second important point to note about this iterator is that it needs almost no extra memory or disk space for the images it generates (no matter how many of them we want to make)! It generates them in small batches (usually 32 or 128 at a time), and they are discarded after the model has trained on them. So we can train our model with millions of samples while holding only about 100KB (for batch size 32) or 400KB (for batch size 128) of generated pixel data at a time, counting one byte per pixel value (float32 data takes four times as much). This is extremely important when our images are in real size (like 2048x3072).
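The memory figures above can be checked with simple arithmetic. The sketch assumes one byte per pixel value for raw images and four bytes per value once they are converted to float32; `batch_bytes` is a helper name we made up.

```python
def batch_bytes(batch_size, height, width, channels, bytes_per_value):
    """Memory needed to hold the pixel data of one batch of images."""
    return batch_size * height * width * channels * bytes_per_value

for batch_size in (32, 128):
    as_uint8 = batch_bytes(batch_size, 32, 32, 3, 1)
    as_float32 = batch_bytes(batch_size, 32, 32, 3, 4)
    print("batch of %3d: %6.0f KB as uint8, %6.0f KB as float32"
          % (batch_size, as_uint8 / 1024.0, as_float32 / 1024.0))

# One batch of 32 full-size 2048x3072 RGB images, by comparison
large_batch = batch_bytes(32, 2048, 3072, 3, 1)
print("32 images of 2048x3072: %.0f MB as uint8" % (large_batch / 1024.0 ** 2))
```

For full-size photos even a single batch is large, which is why generating batches on demand matters.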

Let's now see the second type of Keras training, based on the ImageDataGenerator. Note the new training method name: fit_generator.

Model 2 (with Data Augmentation)¶

nb_epoch = 100 # This time let's increase the number of epochs to 100

batch_size = 32

model2 = Sequential()

model2.add(Convolution2D(32, 3, 3, input_shape=(32, 32, 3), border_mode='same', activation='relu', W_constraint=maxnorm(3)))

model2.add(Dropout(0.2))

model2.add(Convolution2D(32, 3, 3, activation='relu', border_mode='same', W_constraint=maxnorm(3)))

model2.add(MaxPooling2D(pool_size=(2, 2)))

model2.add(Flatten())

model2.add(Dense(512, activation='relu', W_constraint=maxnorm(3)))

model2.add(Dropout(0.5))

model2.add(Dense(nb_classes, activation='softmax'))

# Compile model with SGD

lrate = 0.01

decay = lrate/nb_epoch

sgd = SGD(lr=lrate, momentum=0.9, decay=decay, nesterov=False)

model2.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

print(model2.summary())

print('Augmented Data Training.')

imdgen = ImageDataGenerator(

featurewise_center = False, # set input mean to 0 over the dataset

samplewise_center = False, # set each sample mean to 0

featurewise_std_normalization = False, # divide inputs by std of the dataset

samplewise_std_normalization = False, # divide each input by its std

zca_whitening = False, # apply ZCA whitening

rotation_range = 0, # randomly rotate images in the range (degrees, 0 to 180)

width_shift_range = 0.1, # randomly shift images horizontally (fraction of total width)

height_shift_range = 0.1, # randomly shift images vertically (fraction of total height)

horizontal_flip = True, # randomly flip images

vertical_flip = False, # randomly flip images

)

# compute quantities required for featurewise normalization

# (std, mean, and principal components if ZCA whitening is applied)

imdgen.fit(X_train)

# fit the model on the batches generated by datagen.flow()

dgen = imdgen.flow(X_train, Y_train, batch_size=batch_size)

fmon = FitMonitor(thresh=0.03, minacc=0.98) # this is from our kerutils module (see above)

h = model2.fit_generator(

dgen,

samples_per_epoch = X_train.shape[0],

nb_epoch = nb_epoch,

validation_data = (X_test, Y_test),

verbose = 0,

callbacks = [fmon]

)

show_scores(model2, h, X_train, Y_train, X_test, Y_test)

print('Saving model2 to "model2.h5"')

model2.save("model2.h5")

loss, accuracy = model2.evaluate(X_train, Y_train, verbose=0)

print("Training: accuracy = %f ; loss = %f" % (accuracy, loss))

loss, accuracy = model2.evaluate(X_test, Y_test, verbose=0)

print("Validation: accuracy = %f ; loss = %f" % (accuracy, loss))

Indeed, training with augmented data has yielded better validation accuracy (almost 10% more than the previous model, which has the same layers). Training accuracy has dropped drastically from above 99.9% to less than 88.7%, but this is not an indication of an inferior model. On the contrary, the extreme overfitting that we got in model1 was a clear indication of model inadequacy. The overfitting that we see in model2 is not too bad, and model2 is better suited for practical use than model1.

Still, 80% is not good enough (it was super exceptional in the 90's :-) and we should strive for more. Looking at the accuracy and loss graphs, it doesn't look like we are going to get much improvement in model2 by adding more epochs or tuning other parameters, although we encourage students to try other optimizers and activation functions (Keras has plenty of them) if a fast GPU is available. Otherwise it can take a lot of time: it took us about 3 hours to run 120 epochs on an NVIDIA GeForce GTX950, so it could take days to try more epochs and different parameters, unless you have an NVIDIA TITAN or TESLA card.

In the rest of this tutorial we will experiment with medium and big models that contain more layers and more neurons.

Model 3 (with Data Augmentation)¶

nb_epoch = 120

batch_size = 32

model3 = Sequential()

model3.add(Convolution2D(32, 3, 3, input_shape=(32, 32, 3), border_mode='same', activation='relu', W_constraint=maxnorm(3)))

model3.add(Dropout(0.2))

model3.add(Convolution2D(32, 3, 3, activation='relu', border_mode='same', W_constraint=maxnorm(3)))

model3.add(MaxPooling2D(pool_size=(2, 2)))

model3.add(Dropout(0.2))

model3.add(Convolution2D(64, 3, 3, border_mode='same'))

model3.add(Activation('relu'))

model3.add(MaxPooling2D(pool_size=(2, 2)))

model3.add(Dropout(0.25))

model3.add(Flatten())

model3.add(Dense(512, activation='relu', W_constraint=maxnorm(3)))

model3.add(Dropout(0.5))

model3.add(Dense(nb_classes, activation='softmax'))

# Compile model with SGD (Stochastic Gradient Descent)

lrate = 0.01

decay = lrate/nb_epoch

sgd = SGD(lr=lrate, momentum=0.9, decay=decay, nesterov=False)

model3.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

print(model3.summary())

print('Augmented Data Training.')

imdgen = ImageDataGenerator(

featurewise_center = False, # set input mean to 0 over the dataset

samplewise_center = False, # set each sample mean to 0

featurewise_std_normalization = False, # divide inputs by std of the dataset

samplewise_std_normalization = False, # divide each input by its std

zca_whitening = False, # apply ZCA whitening

rotation_range = 0, # randomly rotate images in the range (degrees, 0 to 180)

width_shift_range = 0.1, # randomly shift images horizontally (fraction of total width)

height_shift_range = 0.1, # randomly shift images vertically (fraction of total height)

horizontal_flip = True, # randomly flip images

vertical_flip = False, # randomly flip images

)

# compute quantities required for featurewise normalization

# (std, mean, and principal components if ZCA whitening is applied)

imdgen.fit(X_train)

# fit the model on the batches generated by datagen.flow()

dgen = imdgen.flow(X_train, Y_train, batch_size=batch_size)

fmon = FitMonitor(thresh=0.03, minacc=0.99) # this is from our kerutils module (see above)

h = model3.fit_generator(

dgen,

samples_per_epoch = X_train.shape[0],

nb_epoch = nb_epoch,

validation_data = (X_test, Y_test),

verbose = 0,

callbacks = [fmon]

)

show_scores(model3, h, X_train, Y_train, X_test, Y_test)

print('Saving model3 to "model3.h5"')

model3.save("model3.h5")

print('Saving history dict to pickle file: hist3.p')

with open('hist3.p', 'wb') as f:
    pickle.dump(h.history, f)

loss, accuracy = model3.evaluate(X_train, Y_train, verbose=0)

print("Training: accuracy = %f ; loss = %f" % (accuracy, loss))

loss, accuracy = model3.evaluate(X_test, Y_test, verbose=0)

print("Validation: accuracy = %f ; loss = %f" % (accuracy, loss))

Model3 size: 2.13M parameters. Training accuracy rose to 90%, and validation accuracy to 83.7%. There's still a 7% overfitting gap, and 83.7% validation accuracy is still not high enough. Let's try harder.
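The 2.13M figure can be reproduced by hand from the layer definitions of model3, assuming it refers to the number of trainable parameters. The helper functions below are our own; dropout, pooling and flatten layers have no parameters and only affect the shapes.

```python
def conv_layer_params(in_channels, n_filters, kernel=3):
    """Weights plus one bias per filter of a kernel x kernel convolution."""
    return in_channels * kernel * kernel * n_filters + n_filters

def dense_layer_params(n_inputs, n_units):
    """Weights plus one bias per unit of a fully connected layer."""
    return n_inputs * n_units + n_units

size = 32                                       # images are 32x32 with 3 channels
layer_counts = []
layer_counts.append(conv_layer_params(3, 32))   # 1st convolution, 'same' border keeps 32x32
layer_counts.append(conv_layer_params(32, 32))  # 2nd convolution
size //= 2                                      # 2x2 max pooling -> 16x16
layer_counts.append(conv_layer_params(32, 64))  # 3rd convolution
size //= 2                                      # 2x2 max pooling -> 8x8
flat = size * size * 64                         # Flatten -> 4096 values
layer_counts.append(dense_layer_params(flat, 512))
layer_counts.append(dense_layer_params(512, 10))  # softmax output layer

total_params = sum(layer_counts)
print(layer_counts, total_params)
```

Almost all of the parameters sit in the first Dense layer, which is one reason the pooling layers before it matter so much.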

Model 4 (with Data Augmentation)¶

nb_epoch = 400

batch_size = 32

model4 = Sequential()

model4.add(Convolution2D(32, 3, 3, input_shape=(32, 32, 3), border_mode='same', activation='relu'))

model4.add(Dropout(0.2))

model4.add(Convolution2D(32, 3, 3, activation='relu', border_mode='same'))

model4.add(MaxPooling2D(pool_size=(2, 2)))

model4.add(Dropout(0.2))

model4.add(Convolution2D(64, 3, 3, border_mode='same'))

model4.add(Activation('relu'))

model4.add(Convolution2D(64, 3, 3))

model4.add(Activation('relu'))

model4.add(MaxPooling2D(pool_size=(2, 2)))

model4.add(Dropout(0.25))

model4.add(Flatten())

model4.add(Dense(512, activation='relu', W_constraint=maxnorm(3)))

model4.add(Dropout(0.25))

model4.add(Dense(nb_classes, activation='softmax'))

# Compile model with SGD (Stochastic Gradient Descent)

lrate = 0.01

decay = lrate/nb_epoch

sgd = SGD(lr=lrate, momentum=0.9, decay=decay, nesterov=True)

model4.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

print(model4.summary())

print('Augmented Data Training.')

imdgen = ImageDataGenerator(

featurewise_center = False, # set input mean to 0 over the dataset

samplewise_center = False, # set each sample mean to 0

featurewise_std_normalization = False, # divide inputs by std of the dataset

samplewise_std_normalization = False, # divide each input by its std

zca_whitening = False, # apply ZCA whitening

rotation_range = 0, # randomly rotate images in the range (degrees, 0 to 180)

width_shift_range = 0.1, # randomly shift images horizontally (fraction of total width)

height_shift_range = 0.1, # randomly shift images vertically (fraction of total height)

horizontal_flip = True, # randomly flip images

vertical_flip = False, # randomly flip images

)

# compute quantities required for featurewise normalization

# (std, mean, and principal components if ZCA whitening is applied)

imdgen.fit(X_train)

# fit the model on the batches generated by datagen.flow()

dgen = imdgen.flow(X_train, Y_train, batch_size=batch_size)

fmon = FitMonitor(thresh=0.03, minacc=0.99) # this is from our kerutils module (see above)

h = model4.fit_generator(

dgen,

samples_per_epoch = X_train.shape[0],

nb_epoch = nb_epoch,

validation_data = (X_test, Y_test),

verbose = 0,

callbacks = [fmon]

)

show_scores(model4, h, X_train, Y_train, X_test, Y_test)

print('Saving model4 to "model4.h5"')

model4.save("model4.h5")

print('Saving history dict to pickle file: hist4.p')

with open('hist4.p', 'wb') as f:
    pickle.dump(h.history, f)

loss, accuracy = model4.evaluate(X_train, Y_train, verbose=0)

print("Training: accuracy = %f ; loss = %f" % (accuracy, loss))

loss, accuracy = model4.evaluate(X_test, Y_test, verbose=0)

print("Validation: accuracy = %f ; loss = %f" % (accuracy, loss))

Looks like we did it again: validation accuracy has risen to 88%, but training accuracy is too high (99.77%), which is an indication of overfitting, although not on the same scale as in model1 (30% gap).

We will take advantage of this situation to demonstrate how you can continue to train a model from the last training point (it took 2.15 hours to reach the last model state).

To be safe we'll just copy model4 to model5 by loading the saved file of model4 into a new model. Then we will train model5 in the same way as above. We will give it 100 extra epochs and see where it gets us.

Model 5¶

Let's first copy model4 to model5:

model5 = load_model("model4.h5")

Just for assurance, let's compute the training accuracy of model5 and see if we get the same scores as for model4

loss, accuracy = model5.evaluate(X_train, Y_train, verbose=0)

print("Training: accuracy = %f ; loss = %f" % (accuracy, loss))

loss, accuracy = model5.evaluate(X_test, Y_test, verbose=0)

print("Validation: accuracy = %f ; loss = %f" % (accuracy, loss))

Looks good. Let's try to train model5 with additional samples generated by the Keras ImageDataGenerator. This time we'll add a small rotation range of 7 degrees, and set the shift ranges to 0.05. We will also try a different batch_size of 24 samples.

lrate = 0.01

decay = 1e-6

sgd = SGD(lr=lrate, momentum=0.9, decay=decay, nesterov=False)

model5.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

loss, accuracy = model5.evaluate(X_test, Y_test, verbose=0)

print("Validation: accuracy = %f ; loss = %f" % (accuracy, loss))

nb_epoch = 100

batch_size = 24

print('Post Training')

imdgen = ImageDataGenerator(

featurewise_center = False, # set input mean to 0 over the dataset

samplewise_center = False, # set each sample mean to 0

featurewise_std_normalization = False, # divide inputs by std of the dataset

samplewise_std_normalization = False, # divide each input by its std

zca_whitening = False, # apply ZCA whitening

rotation_range = 7, # randomly rotate images in the range (degrees, 0 to 180)

width_shift_range = 0.05, # randomly shift images horizontally (fraction of total width)

height_shift_range = 0.05, # randomly shift images vertically (fraction of total height)

horizontal_flip = True, # randomly flip images

vertical_flip = False, # randomly flip images

)

# compute quantities required for featurewise normalization

# (std, mean, and principal components if ZCA whitening is applied)

imdgen.fit(X_train)

# fit the model on the batches generated by datagen.flow()

dgen = imdgen.flow(X_train, Y_train, batch_size=batch_size)

fmon = FitMonitor(thresh=0.03, minacc=0.99) # this is from our kerutils module (see above)

h = model5.fit_generator(

dgen,

samples_per_epoch = X_train.shape[0],

nb_epoch = nb_epoch,

validation_data = (X_test, Y_test),

verbose = 0,

callbacks = [fmon]

)

show_scores(model5, h, X_train, Y_train, X_test, Y_test)

print('Saving model5 to "model5.h5"')

model5.save("model5.h5")

loss, accuracy = model5.evaluate(X_train, Y_train, verbose=0)

print("Training: accuracy = %f ; loss = %f" % (accuracy, loss))

loss, accuracy = model5.evaluate(X_test, Y_test, verbose=0)

print("Validation: accuracy = %f ; loss = %f" % (accuracy, loss))

We lost our patience after 70 epochs. The graph suggests that if we wait long enough we may cross the 90% barrier. Instead, let's try another type of activation function: SReLU (S-shaped Rectified Linear Unit).

Model 6¶

SReLU is an intriguing activation function as it has four learnable parameters that are tuned during the training process. Here is the main paper on this function: https://arxiv.org/pdf/1512.07030.pdf
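For orientation, here is a small sketch of the piecewise-linear function described in that paper, written for a single scalar input. The parameter names `t_left`, `a_left`, `t_right` and `a_right` are our own labels for the two thresholds and the two slopes, and the default values are arbitrary. In the Keras layer these parameters are learned, and its internal parametrization may differ from this sketch.

```python
def srelu(x, t_left=-1.0, a_left=0.1, t_right=1.0, a_right=0.5):
    """S-shaped ReLU: identity between the thresholds, its own slope on each side."""
    if x >= t_right:
        return t_right + a_right * (x - t_right)   # right linear piece
    if x <= t_left:
        return t_left + a_left * (x - t_left)      # left linear piece
    return x                                       # middle piece: identity

for value in (-3.0, -1.0, 0.0, 0.5, 1.0, 3.0):
    print("srelu(%4.1f) = %5.2f" % (value, srelu(value)))
```

With t_left = 0, a_left = 0 and a_right = 1 the function reduces to the ordinary ReLU.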

from keras.layers.noise import GaussianNoise

from keras.layers.advanced_activations import SReLU

batch_size = 64

nb_epoch = 400

nb_filters = 32

# size of pooling area for max pooling

nb_pool = 2

# convolution kernel size

nb_conv = 3

model6 = Sequential()

# noise input

percent_noise = 0.1

noise = (1.0/255) * percent_noise

model6.add(GaussianNoise(noise, input_shape=(32,32,3)))

model6.add(Convolution2D(2*nb_filters, nb_conv, nb_conv))

model6.add(SReLU())

model6.add(Convolution2D(nb_filters, nb_conv, nb_conv))

model6.add(SReLU())

model6.add(Convolution2D(2*nb_filters, nb_conv, nb_conv))

model6.add(SReLU())

model6.add(Convolution2D(nb_filters, nb_conv, nb_conv))

model6.add(SReLU())

model6.add(MaxPooling2D(pool_size=(nb_pool, nb_pool)))

model6.add(Dropout(0.25))

model6.add(Flatten())

#99.51 0.0, 0.0

model6.add(Dense(512))

model6.add(SReLU())

model6.add(Dropout(0.25))

model6.add(Dense(512))

model6.add(SReLU())

model6.add(Dropout(0.25))

model6.add(Dense(nb_classes))

model6.add(Activation('softmax'))

# Compile model with SGD (Stochastic Gradient Descent)

lrate = 0.01

decay = lrate/nb_epoch

sgd = SGD(lr=lrate, momentum=0.9, decay=decay, nesterov=False)

model6.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

#model6.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

imdgen = ImageDataGenerator(

featurewise_center = False, # set input mean to 0 over the dataset

samplewise_center = False, # set each sample mean to 0

featurewise_std_normalization = False, # divide inputs by std of the dataset

samplewise_std_normalization = False, # divide each input by its std

zca_whitening = False, # apply ZCA whitening

rotation_range = 4, # randomly rotate images in the range (degrees, 0 to 180)

width_shift_range = 0.1, # randomly shift images horizontally (fraction of total width)

height_shift_range = 0.1, # randomly shift images vertically (fraction of total height)

horizontal_flip = True, # randomly flip images

vertical_flip = False, # randomly flip images

)

# compute quantities required for featurewise normalization

# (std, mean, and principal components if ZCA whitening is applied)

imdgen.fit(X_train)

# fit the model on the batches generated by datagen.flow()

dgen = imdgen.flow(X_train, Y_train, batch_size=batch_size)

fmon = FitMonitor(thresh=0.03, minacc=0.99) # this is from our kerutils module (see above)

h = model6.fit_generator(

dgen,

samples_per_epoch = X_train.shape[0],

nb_epoch = nb_epoch,

validation_data = (X_test, Y_test),

verbose = 1,

callbacks = [fmon]

)

show_scores(model6, h, X_train, Y_train, X_test, Y_test)

print('Saving model6 to "model6.h5"')

model6.save("model6.h5")

plot(model6, to_file='model6.png', show_layer_names=False, show_shapes=True)

print('Saving history dict to pickle file: hist6.p')

with open('hist6.p', 'wb') as f:
    pickle.dump(h.history, f)

loss, accuracy = model6.evaluate(X_train, Y_train, verbose=0)

print("Training: accuracy = %f ; loss = %f" % (accuracy, loss))

loss, accuracy = model6.evaluate(X_test, Y_test, verbose=0)

print("Validation: accuracy = %f ; loss = %f" % (accuracy, loss))

Indeed, the SReLU activation has raised training accuracy to 99.91%, but validation accuracy (87.3%) is still a long way behind. Overcoming overfitting remains an interesting challenge. Or do we simply need more training samples? There are 80 million more samples in the tiny images dataset that CIFAR-10 was drawn from, so it can be tried ...

Project¶

Find photos of dogs, cats, and automobiles on the internet or in your personal photo albums, and see if our models recognize them as such. You will have to rescale your pictures to fit the model input shape. Take a look at the following post to see how you can feed them to one of the models that we built in this tutorial:

https://blog.rescale.com/neural-networks-using-keras-on-rescale/

Here is a simple-minded way to do it (but read the scipy manual to understand more):

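import numpy as np

from scipy.misc import imread, imresize

from keras.models import load_model

def rescale_image(image_file):
    # read the file as an RGB image and resize it to the 32x32 model input size
    return imresize(imread(image_file, False, 'RGB'), (32, 32))

def load_and_scale_imgs(img_files):
    imgs = [rescale_image(img_file) for img_file in img_files]
    # the models were trained on floats in [0, 1], so scale the pixels the same way
    return np.array(imgs).astype('float32') / 255

# placeholder file names: replace them with your own pictures
image_files = ['dog.jpg', 'cat.jpg', 'car.jpg']

imgs = load_and_scale_imgs(image_files)

model = load_model("model5.h5") # one of the models we saved above

predictions = model.predict_classes(imgs)

for image_file, pred in zip(image_files, predictions):
    print("%s: %s" % (image_file, class_name[pred]))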